Linguistic analysis of my PhD thesis

Image credit: Olaf Lipinski

Analysing my PhD thesis with Python

I recently saw someone post about the statistics of their PhD thesis, and I thought I could join in on the fun, but with an emergent communication twist! I will analyse my own thesis with some linguistically inspired metrics and see what interesting patterns emerge.

In this post I will:

- Start with some simple overall stats

  - I use readability score calculations from textstat and textblob

    - For more explanation on these metrics, visit readability formulas and textblob documentation

  - I also use SpaCy for analysing other parts of the text

    - For explanation on SpaCy, visit their documentation

- Analyse the thesis section by section

  - This is very interesting in my opinion!

  - You can see all the metrics change significantly across different sections

- Compare my work to Shakespeare’s writing

  - Just for fun, to see how we stack up!

I use several readability metrics including “Flesch Reading Ease”, “SMOG Index”, “Flesch-Kincaid Grade Level”, and “Automated Readability Index”, which can be summarized as:

- Flesch Reading Ease: Higher scores indicate easier readability (100 is very easy, 0 is very difficult)

- SMOG Index: Estimates the years of education needed to understand a text

- Flesch-Kincaid Grade Level: Corresponds to US grade level required to comprehend the text

- Automated Readability Index: Similar to Flesch-Kincaid, indicates US grade level needed
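As a rough illustration of what these four scores measure, they can be computed from their standard published formulas with nothing but the standard library. This is a sketch, not textstat's implementation: the syllable counter is a naive vowel-group heuristic, the sentence splitter is a simple regex (so decimals such as "16.4" will confuse it), and the function names are made up for this example.

```python
import math
import re


def count_syllables(word):
    """Naive estimate: one syllable per run of consecutive vowels."""
    return max(1, len(re.findall(r"[aeiouy]+", word.lower())))


def readability(text):
    """Return the four readability scores for a piece of plain text."""
    # Assumption: sentences end at '.', '!' or '?'; words are runs of
    # letters/digits, optionally with an apostrophe (e.g. "don't").
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z0-9]+(?:'[A-Za-z]+)?", text)
    if not words:
        raise ValueError("text must contain at least one word")

    syllables = [count_syllables(w) for w in words]
    n_chars = sum(ch.isalnum() for w in words for ch in w)
    n_polysyllables = sum(1 for s in syllables if s >= 3)

    words_per_sentence = len(words) / len(sentences)
    syllables_per_word = sum(syllables) / len(words)
    chars_per_word = n_chars / len(words)

    return {
        "flesch_reading_ease": 206.835
        - 1.015 * words_per_sentence
        - 84.6 * syllables_per_word,
        "flesch_kincaid_grade": 0.39 * words_per_sentence
        + 11.8 * syllables_per_word
        - 15.59,
        "automated_readability_index": 4.71 * chars_per_word
        + 0.5 * words_per_sentence
        - 21.43,
        "smog_index": 1.0430 * math.sqrt(n_polysyllables * 30 / len(sentences))
        + 3.1291,
    }
```

Short sentences made of short words push Flesch Reading Ease up and the three grade-style scores down, which is why jargon-heavy sections tend to score as harder to read.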

Readability Score Interpretations

Flesch Reading Ease:

- 90-100: Age 11 (UK Year 6/US 5th grade) - very easy

- 80-90: Age 12 (UK Year 7/US 6th grade) - easy

- 70-80: Age 13 (UK Year 8/US 7th grade) - fairly easy

- 60-70: Ages 14-15 (UK Years 9-10/US 8th-9th grade) - plain English

- 50-60: Ages 16-18 (UK Years 11-13/US 10th-12th grade) - fairly difficult

- 30-50: University level (difficult)

- 0-30: Postgraduate level (very difficult)

SMOG Index:

- 6-10: Up to GCSE level (UK)/High school sophomore (US) - accessible to general public

- 11-12: A-level (UK)/High school completion (US)

- 13-16: Undergraduate degree level

- 17+: Postgraduate/professional level

Flesch-Kincaid Grade Level:

- 1-6: Primary school (UK)/Elementary school (US)

- 7-9: Lower secondary (UK Years 7-9)/Junior high (US)

- 10-12: Upper secondary (UK Years 10-13)/High school (US)

- 13-16: Undergraduate level

- 17+: Postgraduate level

Automated Readability Index:

- 1-6: Primary school (UK)/Elementary school (US)

- 7-9: Lower secondary (UK Years 7-9)/Junior high (US)

- 10-12: Upper secondary (UK Years 10-13)/High school (US)

- 13-16: Undergraduate level

- 17+: Postgraduate level
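The bands above translate naturally into a small lookup table. The sketch below does this for Flesch Reading Ease only, using the labels from the list above. One assumption is needed because the listed ranges share their endpoints: a score that falls exactly on a boundary (say, 30) is placed in the easier band. The names `FLESCH_BANDS` and `flesch_band` are made up for this example.

```python
from bisect import bisect_right

# (lower bound, label) pairs taken from the Flesch Reading Ease list above.
FLESCH_BANDS = [
    (0, "postgraduate level (very difficult)"),
    (30, "university level (difficult)"),
    (50, "fairly difficult"),
    (60, "plain English"),
    (70, "fairly easy"),
    (80, "easy"),
    (90, "very easy"),
]


def flesch_band(score):
    """Map a Flesch Reading Ease score to its descriptive band."""
    lower_bounds = [low for low, _ in FLESCH_BANDS]
    # bisect_right puts a boundary score into the band that starts there.
    index = bisect_right(lower_bounds, score) - 1
    # Scores below 0 are possible for very dense text; clamp to the hardest band.
    index = max(index, 0)
    return FLESCH_BANDS[index][1]
```

For example, a score of 31.62 lands in the "university level (difficult)" band, only just above the postgraduate threshold.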

Join me on this journey through my thesis statistics! You can also generate your own analysis, with a few small modifications, using the code linked at the top of this post (feel free to leave a comment/suggest changes on GitHub!).

Overall statistics

Let’s see what we’re working with. These are the stats extracted with the pipeline I used for the plots. Interestingly, my pipeline counts about 6,000 more words than Overleaf’s word count does.

| Metric | Value |
|---|---|
| General Statistics | |
| Total Words | 33,833 |
| Unique Words | 4,753 |
| Lexical Diversity | 0.140 |
| Average Sentence Length | 26.99 |
| Number of Sections | 30 |
| Readability Scores | |
| Flesch Reading Ease | 31.62 |
| SMOG Index | 16.4 |
| Flesch-Kincaid Grade Level | 14.5 |
| Automated Readability Index | 17.2 |
| Top 15 Words | Frequency |
| temporal | 326 |
| agents | 284 |
| language | 180 |
| message | 168 |
| communication | 162 |
| messages | 151 |
| emergent | 145 |
| agent | 131 |
| references | 120 |
| trg | 112 |
| integer | 111 |
| compositional | 101 |
| sec | 100 |
| target | 95 |
| ngram | 89 |
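For a sense of how the general statistics in this table can be derived, here is a minimal sketch using only the standard library. It is not the actual pipeline: the tokenizer is a plain regex, the stopword list is a tiny hypothetical one (the top-words list in the table contains no function words, so some filtering of that kind is assumed), and `overall_stats` is a name invented for this example.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny stopword list for illustration only.
STOPWORDS = {"a", "an", "and", "in", "is", "of", "that", "the", "to", "we"}


def overall_stats(text, top_n=15):
    """Compute word counts, lexical diversity and the most frequent words."""
    words = re.findall(r"[a-z]+", text.lower())
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    counts = Counter(words)
    # Rank only content words so function words do not dominate the list.
    content = Counter({w: c for w, c in counts.items() if w not in STOPWORDS})
    return {
        "total_words": len(words),
        "unique_words": len(counts),
        "lexical_diversity": len(counts) / len(words) if words else 0.0,
        "avg_sentence_length": len(words) / len(sentences) if sentences else 0.0,
        "top_words": content.most_common(top_n),
    }
```

The lexical diversity row is simply unique words divided by total words: 4,753 / 33,833 ≈ 0.140.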

Distributions

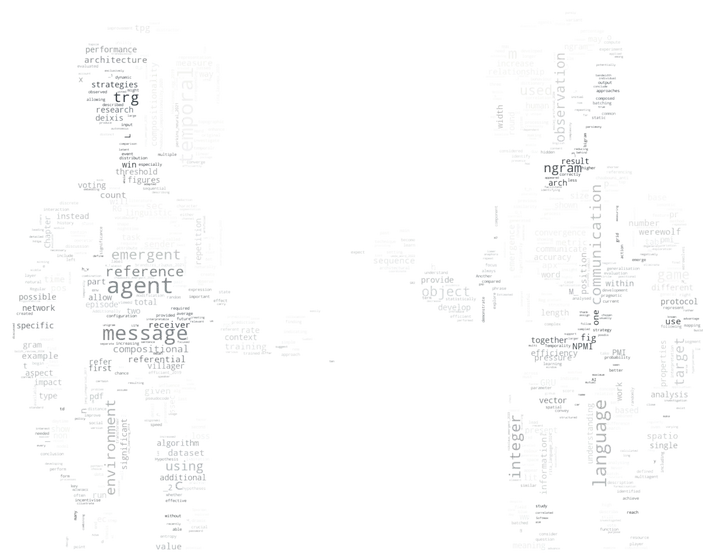

I also plotted the word distributions in a nice histogram, which some might find easier to read than a table.

Using SpaCy, I could also identify different parts of speech used in my thesis! As you can see from my distribution chart, my thesis is heavy on nouns, which is typical for academic writing - lots of concepts and things to discuss!

Parts of Speech Explained

When I analyzed my thesis with SpaCy, it tagged every token with one of these parts of speech:

- NOUN: Entities, concepts, or objects (e.g., “language,” “agents,” “communication”)

- VERB: Words expressing actions, processes, or states (e.g., “analyze,” “develop,” “communicate”)

- PROPN: Proper nouns referring to specific entities or names (e.g., “Shakespeare,” “Python,” “NeurIPS”)

- ADJ: Adjectives modifying nouns by describing attributes or qualities (e.g., “temporal,” “compositional,” “emergent”)

- NUM: Numerals representing quantities, written as digits or as words (e.g., “one,” “30,” “16.4”)

- ADV: Adverbs modifying verbs, adjectives, or other adverbs (e.g., “significantly,” “mostly”)

- PUNCT: Punctuation marks

- X: Miscellaneous category for elements not classified elsewhere

- ADP: Adpositions expressing spatial, temporal, or logical relationships (e.g., “in,” “of,” “through”)

- SYM: Symbols representing concepts rather than linguistic content (e.g., mathematical notation)

- INTJ: Interjections expressing emotional reactions or sentiments

- CCONJ: Coordinating conjunctions connecting equivalent syntactic elements (e.g., “and,” “or,” “but”)

- AUX: Auxiliary verbs supporting the main verb’s tense, mood, or voice (e.g., “is,” “have,” “can”)

- PART: Particles fulfilling grammatical functions without clear lexical meaning (e.g., “to” in infinitives, “not”)

- PRON: Pronouns substituting for nouns or noun phrases (e.g., “I,” “they,” “this”)

- SCONJ: Subordinating conjunctions introducing dependent clauses (e.g., “if,” “because,” “while”)

- DET: Determiners specifying referential properties of nouns (e.g., “the,” “a,” “these”)

The proportions of these different word types help characterize my writing style and differentiate technical sections from more accessible ones.
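Computing those proportions takes only a few lines once the text is tagged. The sketch below assumes tokens that expose a `pos_` attribute, as SpaCy tokens do. The function name `pos_proportions` and the choice to ignore whitespace tokens (tagged `SPACE`) are assumptions made for this example, and the SpaCy usage is left in comments so the block runs without a model installed.

```python
from collections import Counter


def pos_proportions(tokens, ignore=frozenset({"SPACE"})):
    """Return {POS tag: share of tokens}, ordered from most to least common."""
    counts = Counter(tok.pos_ for tok in tokens if tok.pos_ not in ignore)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {pos: n / total for pos, n in counts.most_common()}


# Usage with SpaCy, assuming the small English model is installed
# (python -m spacy download en_core_web_sm):
#
#   import spacy
#   nlp = spacy.load("en_core_web_sm")
#   doc = nlp("Agents develop a temporal language to communicate.")
#   print(pos_proportions(doc))
```

Running this per section rather than on the whole document gives one row of proportions per section, which makes the sections easy to compare.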

Section by section

Let’s start with a simple word count per section. As expected, my literature review is the largest section of all. The other significant spikes correspond to experimental sections and, my favorite, the appendices! My supervisors often commented that I have a tendency to pack the appendices with additional data.

The heatmap shows how often each word appears in each section, normalised to occurrences per 1,000 words.
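Normalising to a rate per 1,000 words is what makes sections of very different lengths comparable. A minimal sketch of that calculation follows, assuming the thesis has already been split into a dictionary mapping section names to plain text. The function name and the regex tokenizer are illustrative choices, not the pipeline's.

```python
import re
from collections import Counter


def rates_per_thousand(sections, terms):
    """Return {section: {term: occurrences per 1,000 words}}."""
    table = {}
    for name, text in sections.items():
        words = re.findall(r"[a-z]+", text.lower())
        counts = Counter(words)
        total = len(words)
        table[name] = {
            term: (1000 * counts[term] / total if total else 0.0)
            for term in terms
        }
    return table
```

Each inner dictionary is one row of the heatmap. Very short sections produce extreme values: a single occurrence in a 50-word section already counts as 20 per 1,000 words.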

Next, let’s examine how the most frequently used words vary across sections. As expected, “temporal” peaks in the experimental chapters of my thesis where I discuss temporality extensively!

Now, let’s look at the sentiment analysis per section. Interestingly, my chapter on the game of Werewolf appears to be more polarizing and subjective. This could be due to the frequent use of words like “villagers” and “voting,” which sentiment algorithms might associate with political discourse.

We can also examine lexical diversity across the thesis. These metrics may be slightly skewed due to incomplete text sanitization. It’s interesting to see that lexical diversity peaks in the introduction of the chapter based on my NeurIPS paper.

Lexical Diversity

Lexical diversity measures how varied your vocabulary is - basically, are you using the same words over and over, or changing it up? The simplest way to calculate this is by dividing unique words by total words. My thesis scored 0.140: it contains 4,753 distinct words out of 33,833 in total, so on average each distinct word is used about seven times. This might seem low, but academic writing typically scores lower than fiction because we keep using the same technical terms (like how “temporal” appears 326 times in my thesis!). Different sections have different diversity scores depending on whether they are introducing broad concepts or diving into technical details.

In this post, I use three measures of lexical diversity:

TTR (Type-Token Ratio): This is that simple ratio I mentioned (unique words divided by total words). While straightforward, TTR has a major drawback - it decreases as text length increases, since you naturally run out of new words to use as you write more.

Moving Window TTR: This addresses the text length problem by calculating TTR within fixed-size windows (say, 100 words each) and then averaging the results. This gives a more consistent measure across texts of different lengths, which is important when comparing sections that vary in size.

MTLD (Measure of Textual Lexical Diversity): This is a more advanced metric. It moves through the text while tracking a running TTR, and every time that TTR drops to a threshold (usually 0.72) it counts one “factor” and starts again from the next word. The MTLD score is the total number of words divided by the number of factors, so it is roughly the average length of a stretch of text that keeps its TTR above the threshold. Higher MTLD values indicate greater lexical diversity. This metric is much less sensitive to text length than raw TTR, making it great for comparing my thesis sections, which range from brief introductions to lengthy literature reviews.
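The three measures can be sketched in plain Python as follows. This is an illustration under stated assumptions rather than the code behind the plots: the moving window slides one word at a time, MTLD is averaged over a forward and a backward pass with a partial factor for the leftover words, a factor is counted when the running TTR is at or below the threshold, and a text that never completes any part of a factor falls back to its own length. All function names are made up for this example.

```python
def ttr(tokens):
    """Type-token ratio: unique words divided by total words."""
    return len(set(tokens)) / len(tokens) if tokens else 0.0


def moving_window_ttr(tokens, window=100):
    """Average TTR over every window of `window` consecutive words."""
    if len(tokens) <= window:
        return ttr(tokens)
    scores = [ttr(tokens[i:i + window]) for i in range(len(tokens) - window + 1)]
    return sum(scores) / len(scores)


def _mtld_one_direction(tokens, threshold):
    factors = 0.0
    seen = set()
    count = 0
    for tok in tokens:
        count += 1
        seen.add(tok)
        if len(seen) / count <= threshold:
            # Running TTR reached the threshold: close this factor and reset.
            factors += 1
            seen.clear()
            count = 0
    if count:
        # Leftover words count as a partial factor, scaled by how far
        # their TTR has fallen from 1.0 towards the threshold.
        factors += (1 - len(seen) / count) / (1 - threshold)
    # Fallback for this sketch: no factor progress at all (every word unique).
    return len(tokens) / factors if factors else float(len(tokens))


def mtld(tokens, threshold=0.72):
    """Mean of the forward and backward MTLD passes."""
    if not tokens:
        return 0.0
    forward = _mtld_one_direction(tokens, threshold)
    backward = _mtld_one_direction(tokens[::-1], threshold)
    return (forward + backward) / 2
```

Here `tokens` is a list of lowercased words, for instance `text.lower().split()` after punctuation has been stripped. A text that repeats one word over and over closes a factor every two words, giving the minimum MTLD of 2.0.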

In my analysis, I’ve used a combination of these metrics to get a comprehensive view of how my vocabulary varies throughout different thesis sections. The spikes in lexical diversity often correlate with sections where I’m introducing new concepts or discussing broader implications, whereas the more technical sections tend to reuse the same terminology consistently (which is actually good practice in academic writing - consistency is key!).

We can quantify the readability of each section using established metrics. Again, the values may be slightly inflated due to text sanitization issues, but I’m pleased to see that the conclusions and introduction score well on readability, as these should be the most accessible sections. The technical sections score lower on readability, as expected, most likely because of their heavy use of technical jargon.

Academic-ness of my writing

Let’s examine some common academic writing patterns: passive voice usage, sentence length, lexical diversity, and first-person pronouns. As expected, we can see that technical sections tend to use more passive voice. Interestingly, my architecture descriptions appear to have notably shorter sentences!
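These patterns can be approximated from a dependency parse. The sketch below assumes sentences made of tokens with `text` and `dep_` attributes, as SpaCy provides, and treats a sentence as passive if any token carries the `nsubjpass` or `auxpass` label. Those are the label names used by SpaCy's English models as far as I know, so treat them as an assumption to check against your model. The function name and the first-person word list are illustrative.

```python
FIRST_PERSON = {"i", "me", "my", "mine", "we", "us", "our", "ours"}
# Assumed dependency labels marking passive constructions.
PASSIVE_DEPS = {"nsubjpass", "auxpass"}


def style_metrics(sentences):
    """Passive-sentence share, sentence length and first-person rate."""
    n_sentences = n_words = n_passive = n_first_person = 0
    for sentence in sentences:
        # Skip punctuation-only tokens so they do not count as words.
        tokens = [t for t in sentence if any(ch.isalnum() for ch in t.text)]
        if not tokens:
            continue
        n_sentences += 1
        n_words += len(tokens)
        n_first_person += sum(t.text.lower() in FIRST_PERSON for t in tokens)
        n_passive += any(t.dep_ in PASSIVE_DEPS for t in tokens)
    if n_sentences == 0:
        return {
            "passive_sentence_share": 0.0,
            "avg_sentence_length": 0.0,
            "first_person_per_1000_words": 0.0,
        }
    return {
        "passive_sentence_share": n_passive / n_sentences,
        "avg_sentence_length": n_words / n_sentences,
        "first_person_per_1000_words": 1000 * n_first_person / n_words,
    }


# Usage with SpaCy, assuming the small English model is installed:
#
#   import spacy
#   nlp = spacy.load("en_core_web_sm")
#   print(style_metrics(nlp("The model was trained. I trained it.").sents))
```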

I also decided (with help from Claude) to analyze whether I overuse specific “academic” terms, including:

- “analysis”

- “research”

- “data”

- “method”

- “results”

- “theory”

- “approach”

- “study”

- “model”

- “framework”

It’s interesting to see that I apparently use “method” frequently in the conclusions of the chapter based on my NeurIPS paper. However, this observation again comes with the caveat that short sections produce less reliable frequencies.

Comparing my writing with Shakespeare

I thought it might be interesting to compare the statistics from my thesis to Shakespeare’s writing. Fortunately, Andrej Karpathy has compiled a tiny Shakespeare dataset, which I used for this comparison.

Let’s start with sentiment analysis. I’m pleased to see that my thesis is both less polarizing and less subjective than Shakespeare’s works—suggesting at least some degree of scientific rigor!

We can also see that my sentences are somewhat longer, and my passive voice usage is significantly higher than Shakespeare’s.

Somewhat surprisingly, my thesis is less readable than Shakespeare’s writing according to every metric. (Note that for Flesch Reading Ease, higher scores indicate better readability, while for the other metrics, lower scores are better.) This is rather humbling, considering that Shakespeare uses archaic terms like “thou”, which aren’t particularly accessible to modern readers. But then again, he was a master playwright after all…

At least I can claim slightly higher lexical diversity! Though I’m concerned this might also be influenced by LaTeX commands that weren’t fully removed during text sanitization.

Finally, let’s examine the top words used by Shakespeare and myself. Unsurprisingly, there’s virtually no overlap. Emergent communication wasn’t particularly popular in the late 16th and early 17th centuries when Shakespeare was writing.

Summary

While this post is primarily for fun, I think it nicely showcases a few important points. First, text sanitization is surprisingly challenging and time-consuming—I now have much greater appreciation for data preparation specialists. Second, there are numerous fascinating approaches to analyzing text and, by extension, language! Exploring these different metrics and seeing how they compare has been super fun. This kind of analysis is precisely what made me pursue emergent communication and linguistics in my PhD, and I hope I’ve managed to convey some of that enthusiasm to you, dear reader!